We found that many users search for help with crawl issues where Googlebot can't access their site, so we wanted to share this article to help them, since experience is often the best teacher. For Google's own definition, see Google's answer on access denied errors. Issues like this should be noted and fixed promptly, because ignoring them can seriously harm your website.

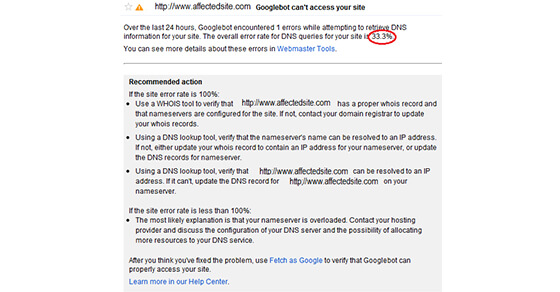

In Google Webmaster Tools, I received a message (see the image above).

Here is what to do:

1. Read the notification and check the site's overall error rate for DNS queries.

Is it 100%, or lower? If the site's overall error rate is, say, 30%, what should you do next?

2. Read the recommended action for your error rate.

According to Google's webmaster team in their post about crawl error alerts, the possible causes are:

- Your DNS server is down or misconfigured

- Your web server is firewalled off

- Your server is refusing connections from Googlebot

- Your server is down or overloaded

- Your site's robots.txt is inaccessible
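
To rule out the first cause on this list, you can check whether your domain resolves at all. Below is a minimal Python sketch that uses only the standard library. `resolve_host` is a hypothetical helper name, and it queries your own machine's resolver (which may have cached answers), so a successful lookup here does not prove that Googlebot's lookups succeed.

```python
from __future__ import annotations

import socket


def resolve_host(hostname: str) -> list[str]:
    """Return the unique IP addresses that `hostname` resolves to.

    An empty list means the lookup failed, which is the same symptom
    Googlebot sees when a DNS server is down or misconfigured.
    """
    try:
        results = socket.getaddrinfo(hostname, None)
    except (socket.gaierror, UnicodeError):
        # gaierror: the resolver could not find the name (or DNS is unreachable).
        # UnicodeError: the name itself is malformed (e.g. an empty label).
        return []
    # Each result is (family, type, proto, canonname, sockaddr);
    # sockaddr[0] is the IP address for both IPv4 and IPv6.
    return sorted({info[4][0] for info in results})
```

For example, `resolve_host("www.example.com")` (replace the hypothetical hostname with your own domain) returns the addresses your domain points to; an empty list suggests you should check your DNS settings.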

If the error rate is below 100%, consider checking whether your web server is down or overloaded. One of the recommended actions is to contact your hosting provider, discuss the configuration issue, and ask about allocating more resources to the DNS service.

3. Double-check the real cause or investigate further.

In most cases, the cause turns out to be server overload.

If the error rate is 100%, follow the recommendations Google gives (see the image above), or simply do the following:

- Check your DNS settings

- Check that your hosting account is verified and active
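
The decision in steps 2 and 3 boils down to one comparison on the error rate. The sketch below encodes it as a minimal illustration; the function names and the failed/attempted counts are hypothetical (Webmaster Tools reports the rate for you), and the returned checklists simply restate this article's advice.

```python
from __future__ import annotations


def dns_error_rate(failed: int, attempted: int) -> float:
    """Percentage of DNS queries that failed, e.g. 30 of 100 gives 30.0."""
    if attempted <= 0:
        raise ValueError("attempted must be positive")
    return 100.0 * failed / attempted


def suggested_checks(error_rate: float) -> list[str]:
    """Map an overall DNS error rate to the checks described in this article."""
    if error_rate >= 100.0:
        # Every query failed: look at DNS configuration and the account itself.
        return [
            "Check your DNS settings",
            "Check that your hosting account is verified and active",
        ]
    if error_rate > 0.0:
        # Some queries failed: most often the server is overloaded.
        return [
            "Check whether your web server is down or overloaded",
            "Remove or reduce unnecessary plug-ins, then re-test",
            "Ask your hosting provider about more resources for DNS",
        ]
    return []
```

For the 30% example from step 1, `dns_error_rate(30, 100)` gives `30.0`, and `suggested_checks(30.0)` returns the below-100% checklist.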

4. Take whatever action you can immediately.

Before contacting your hosting provider, start by removing or reducing unnecessary plug-ins and test whether that solves the issue. If it doesn't, contact your hosting provider right away.

5. Check whether the fix worked by using Fetch as Google to verify that Googlebot can now access your site.

In our case, simply removing those unnecessary plug-ins was enough: when we used Fetch as Google in Webmaster Tools again, Googlebot could access the website. But if you think those plug-ins are still useful, contact your hosting provider and discuss allocating more resources to DNS.

An important reminder: if you have received this kind of notice, contacting your hosting provider is always an effective option, since they can tell whether the fault is theirs or yours. Before contacting them, make sure your site is truly down, and before checking, clear your browser's cache and cookies.
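
Before contacting your host, you can also confirm from a script whether the site is really down. This minimal sketch assumes Python 3 and uses only `urllib` from the standard library. `check_url` is a hypothetical helper, and the no-cache request headers only ask caches along the way for a fresh copy; they do not clear your browser's cache.

```python
from __future__ import annotations

import urllib.error
import urllib.request


def check_url(url: str, timeout: float = 10.0) -> int | None:
    """Request `url` and return its HTTP status code, or None if unreachable."""
    # Ask any intermediate caches for a fresh copy rather than a stored one.
    request = urllib.request.Request(
        url,
        headers={"Cache-Control": "no-cache", "Pragma": "no-cache"},
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status such as 403 or 503.
        return err.code
    except OSError:
        # URLError and timeouts are OSError subclasses: no answer at all
        # (DNS failure, refused connection, or the request timed out).
        return None
```

Run it against your home page and against `/robots.txt`: `None` means the server could not be reached at all, while a status such as 503 means it answered but is failing. Note that this checks from your own network, not Googlebot's, so Fetch as Google remains the check that matters.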

Also note that if you keep getting a high DNS error rate, you should talk to your hosting provider right away. Ideally they will fix it immediately; if not, you have a decision to make, and it may be time to change your hosting provider.

For more details, contact our support team at support@seohunkinternational.com or call us at the number given on our website.